Background

With the onset of the COVID-19 pandemic, online education, or simply e-learning, has become the new norm. With the shift to remote learning, popular video-conferencing platforms such as Google Meet and Zoom have become part and parcel of every teacher's life. But there is a sharp difference between in-person and online teaching. Handling so many students online is hard, and doing it poorly has opened up new loopholes in the current education system. Facial expressions communicate a lot about a student, often more than they care to admit. Whether a student is interested in a class, and whether they are paying attention to what the teacher is presenting: this information is extremely valuable to teachers as well as to the school or college the students are enrolled in.

According to the NYT, because online classes offer so little of the traditional face-to-face interaction between lecturers and students, students' interest in online education has dropped drastically around the world over the last few months!

And this is actually a two-way process. Poor response from bored students exhausts teachers, which degrades the overall experience and puts the future of these young minds at stake. Despite extensive research, we couldn't find any platform or app that tackles this critical issue effectively.

We believe that, with the power of AI, this can be solved with a creative approach. So we made EdyfAI!

What is EdyfAI?

EdyfAI is a solution in the form of a web extension. It is a tool that helps teachers and students improve the online learning experience. By looking at students' expressions throughout a course, EdyfAI can help you figure out which points of the course were interesting and which parts confused students the most. It also shows which topics made students lose interest and turn their attention to other activities. This in turn can help make online learning a fun, interesting and productive experience.

A further explanation of how each of the features listed below works can be found in the Engineering section.

Features

- Live Instructor Dashboard

- Understanding

- Engagement

- Enjoyment

- Heart Rate monitoring

- Text to Speech interaction

- Analysis Report

Engineering

So, how does everything work?

How did we make it?

EdyfAI is crafted with ❤️. EdyfAI is primarily a JavaScript-based web app running on TensorFlow.js. Under the hood it segments the face via Affectiva-API and face-api.js, tracks the user's face, and provides graphed feedback for the whole session, broken down by various factors. Intel's Heartbeat.js is used to monitor the user's heartbeat during sessions. It is also fitted with a Speech-to-Text API for voice interaction. We use a few third-party libraries such as plotly.js to render the plots in the feedback modal. Apart from OAuth, there is no backend, i.e. all of the computation is done on the client side. The model is loaded once and stored in localStorage, which lets it work offline too and, because the processing stays on the user's device, helps with GDPR compliance and user privacy. The application is deployed on the free tier of Netlify.
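To give a rough idea of how per-frame scores could be turned into the graphed session feedback described above, here is a minimal sketch, not our actual implementation. It assumes a hypothetical frame shape of `{ t, scores }`, where `t` is seconds since the session started and `scores` holds values between 0 and 1 for metrics such as `engagement`. The function names `summarizeSession` and `lowestWindow` are made up for illustration. The sketch averages a metric over fixed time windows and picks out the window where it was lowest.

```javascript
// Hypothetical frame shape (an assumption for this sketch):
//   { t: <seconds since session start>, scores: { engagement: 0..1, ... } }

// Average one metric over fixed-size time windows.
function summarizeSession(frames, windowSeconds, metric) {
  const buckets = new Map();
  for (const frame of frames) {
    const value = frame.scores ? frame.scores[metric] : undefined;
    // Skip frames without a usable score (e.g. no face was detected).
    if (typeof value !== "number" || Number.isNaN(value)) continue;
    const index = Math.floor(frame.t / windowSeconds);
    const bucket = buckets.get(index) || { sum: 0, count: 0 };
    bucket.sum += value;
    bucket.count += 1;
    buckets.set(index, bucket);
  }
  // Return the windows in chronological order with their mean score.
  return [...buckets.entries()]
    .sort((a, b) => a[0] - b[0])
    .map(([index, { sum, count }]) => ({
      start: index * windowSeconds,
      end: (index + 1) * windowSeconds,
      mean: sum / count,
      samples: count,
    }));
}

// Find the window with the lowest mean, i.e. the part of the
// session where the chosen metric dipped the most.
function lowestWindow(windows) {
  if (windows.length === 0) return null;
  return windows.reduce((low, w) => (w.mean < low.mean ? w : low));
}

// Example usage with made-up data.
const frames = [
  { t: 0, scores: { engagement: 0.75 } },
  { t: 5, scores: { engagement: 0.25 } },
  { t: 12, scores: { engagement: 0.125 } },
  { t: 15, scores: {} }, // no score for this frame, so it is skipped
];
const windows = summarizeSession(frames, 10, "engagement");
const dip = lowestWindow(windows);
console.log(windows, dip);
```

The array returned by `summarizeSession` has one entry per time window, so its `start` and `mean` fields could be passed as the x and y values of a line plot in a charting library such as plotly.js.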

Design

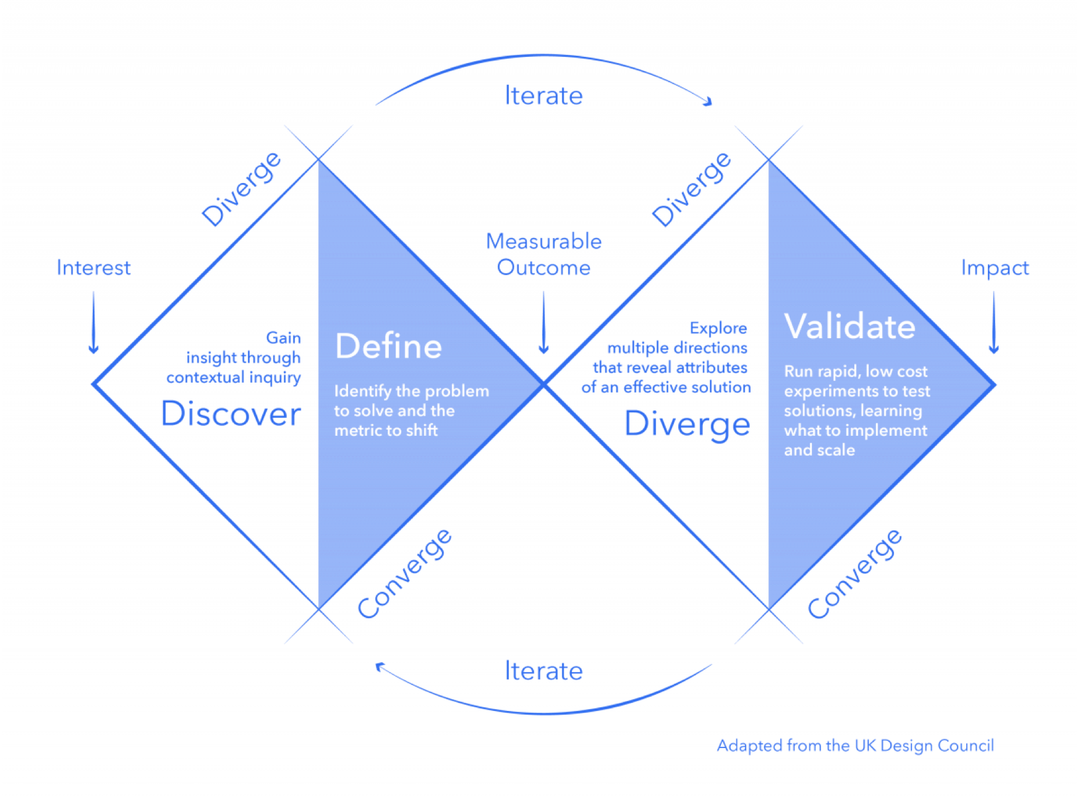

We were heavily inspired by the revised version of the Double Diamond design process developed by the UK Design Council, which covers not only visual design but a full-fledged research cycle in which you must discover and define your problem before tackling your solution.

- Discover: a deep dive into the problem we are trying to solve.

- Define: synthesizing the information from the discovery phase into a problem definition.

- Develop: thinking up solutions to the problem.

- Deliver: picking the best solution and building it.

This time we went for the exotic Glassmorphism UI. We used design tools such as Figma and Photoshop to prototype our designs before writing any code. This let us get iterative feedback so that we spent less time rewriting code.

Research

GoEmotions: A Dataset of Fine-Grained Emotions, ACL 2020 : https://arxiv.org/pdf/2005.00547v2.pdf

Real-time Convolutional Neural Networks for Emotion and Gender Classification : https://arxiv.org/pdf/1710.07557v1.pdf

Heart Rate Measurement Using Face Detection in Video, IEEE 2019 : https://ieeexplore.ieee.org/document/8484779

♣ Datasets :-

- MIMIC II Dataset

- Facial emotion recognition

♣ Articles :-

- Disadvantages of E-learning : https://e-student.org/disadvantages-of-e-learning/

- Current situation of E-Learning : https://bit.ly/3brQCAY

- Learning Online: Problems and Solutions : https://uni.cf/3ygXE5l

- Nearly Half of Students Distracted by Technology : https://bit.ly/3flfg7o

Credits

- Design Resources : Dribbble

- Icons : Icons8

Takeaways

Challenges we ran into

A lot! The whole project was built from scratch; even the idea itself came to us, from Sandipan, during the opening ceremony. Initially, we ran into issues while training the model on our own systems, including underfitting, because we had to reduce the dataset parameters to optimize the model so that it runs smoothly on low-end devices. It was also a bit difficult for us to collaborate in a virtual setting, but we managed to finish the project on time.

What We Learned

Proper sleep is very important! :p Jokes aside, we learned a lot, on both the technical and the non-technical side. Not to mention, we sharpened our Googling and Stack Overflow searching skills during the hackathon :)

Accomplishments

A lot! For EdyfAI to work, a lot of features needed to be implemented. Apart from setting up the front end, we had to put in a lot of extra hours to rework the backend. Over the past weekend, we learned a lot about new web technologies and libraries that we could incorporate into our project to meet our unique needs.

We explored so many things within 36 hours. It was a tad difficult for us to collaborate in a virtual setting, but we are proud of finishing the project on time, which seemed like a tough task initially. Happily, we were also able to add most of the concepts that we envisioned for the app during ideation. Lastly, we think the impact our project could have is a significant accomplishment. Especially given the current COVID-19 situation, this could really be a product that people find useful!

This project was a particular achievement for us because the experience was very different from building a typical hackathon project: it involved heavy brainstorming, extensive research and, yes, hitting the final pin on the board.

What's next for EdyfAI

Finish the unfinished parts, which include adding support for Google Sheets and integrating a native dashboard, followed by improvements to the model. Later, the code may be refactored to add responsiveness and support for mobile devices. Apart from fine-tuning the project, we're also planning to add various filters and custom support for Zoom, Google Meet, etc. Moreover, a lot of code needs to be refactored, as we couldn't get to everything in under 36 hours. Overall, we hope that one day this project can be used globally to redefine existing online education and remove its shortcomings.

Built With

- affectiva-api

- ai

- bootstrap

- heartbeat.js

- html

- javascript

- opencv

- plotly.js
